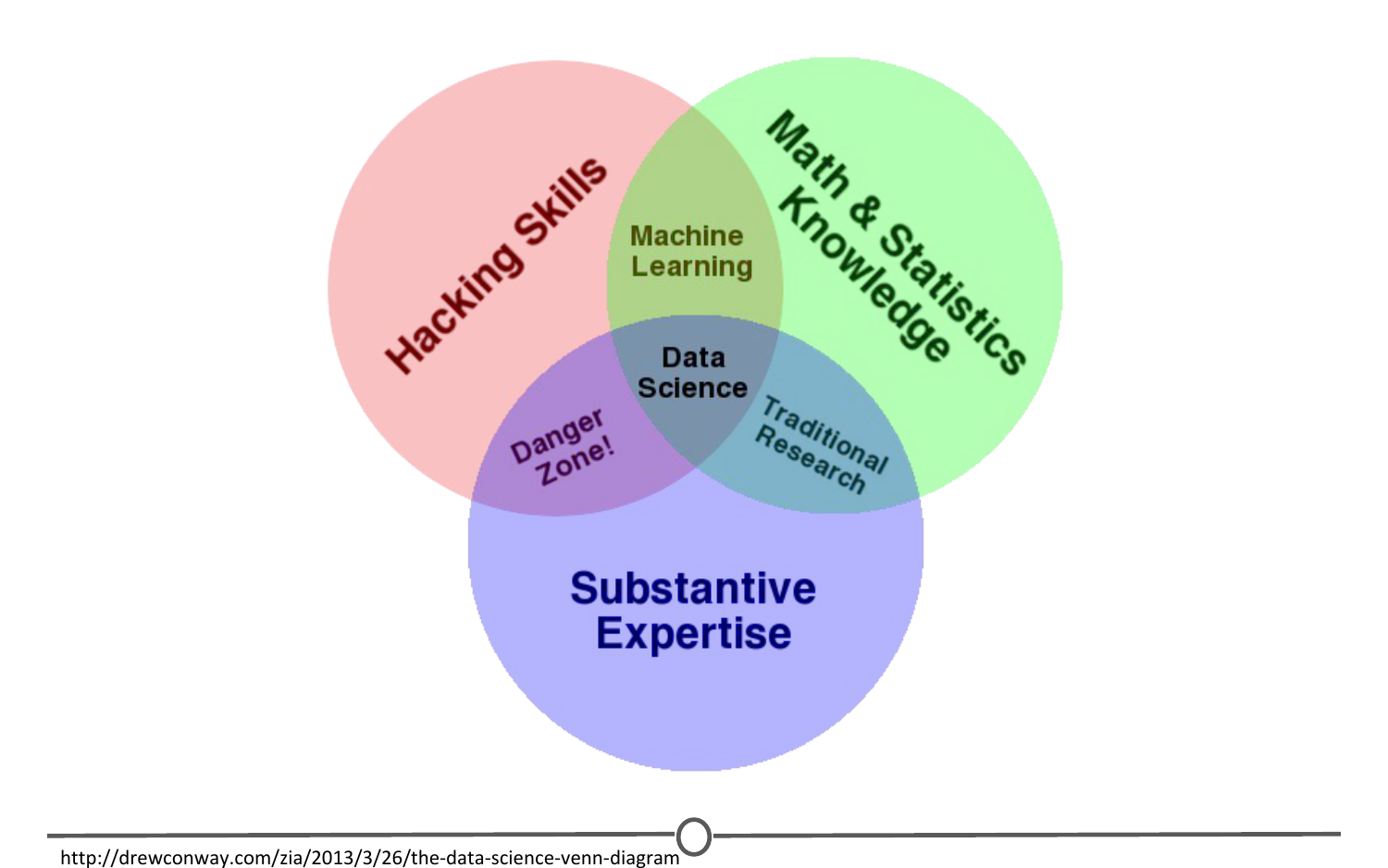

Analyst or Scientist

Before you get started, let’s go over a general breakdown of the skills needed for either a Data Analyst or a Data Scientist. My intention is not to insult anyone (we know who you are) by downplaying your role, or to confuse an Analyst with a Scientist. There is a clear distinction between the two, but to keep things general, let’s go through some skills you will need if you are considering either role.

Let’s start with the definition of data analysis: Data analysis is the collection, transformation, and organization of data in order to draw conclusions, make predictions, and drive informed decision-making.

Data Scientist

So what’s a data scientist? Better yet, what skills does a data scientist possess?

A data scientist needs to be somebody who knows how to find answers to novel problems. Someone “who combines the skills of software programmer, statistician and storyteller slash artist to extract the nuggets of gold hidden under mountains of data.”

Analyst skills

Curiosity/Analytical Skills

The qualities and characteristics associated with using facts to solve problems.

What kinds of questions would you ask based on the data and how it relates to the objectives of the exploratory data analysis (EDA)? Curiosity is critical here, because it will help you come up with questions you can answer.

Technical Mindset

The analytical skill that involves breaking processes down into smaller steps and working with them in an orderly, logical way

As you have discovered, having a technical mindset means approaching problems (and datasets) in a systematic and logical manner. This starts with the way you clean, organize, and prepare your data. It can also guide the tools or software you use to break down data and help you identify and fix incorrect data that can skew your analysis.

Remember that problems aren’t always technical, but a technical mindset is the skill that you use to break down any complex issue into manageable parts. Focusing on implementing a process, regardless of what that looks like, is a great first step to exercising your technical mindset.

Data Design

The analytical skill that involves how you organize information

The skill of data design is an extension of your technical mindset. It deals with how information is organized. Suppose the dataset here is presented in a spreadsheet. You would be able to shift the cells to organize the data to find different patterns. For example, you might organize the data by revenue and then by genre, which could reveal that comedies are more profitable than dramas. Basically, how you choose to structure your data makes analysis easier and more insightful.
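The idea of reorganizing the same rows to surface different patterns can be sketched in a few lines of Python. This is only an illustration: the film titles, genres, and revenue figures below are invented, and a spreadsheet sort or pivot table would do the same job.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical movie records; titles, genres, and revenue figures are invented.
movies = [
    {"title": "Film A", "genre": "comedy", "revenue": 120},
    {"title": "Film B", "genre": "drama", "revenue": 80},
    {"title": "Film C", "genre": "comedy", "revenue": 100},
    {"title": "Film D", "genre": "drama", "revenue": 60},
]

# Organize by revenue (highest first), using genre as a tie-breaker.
by_revenue = sorted(movies, key=lambda m: (-m["revenue"], m["genre"]))

# Regroup the same rows by genre to compare average revenue per genre.
revenue_by_genre = defaultdict(list)
for movie in movies:
    revenue_by_genre[movie["genre"]].append(movie["revenue"])

average_revenue = {genre: mean(values) for genre, values in revenue_by_genre.items()}
```

With this made-up data, `average_revenue` shows comedies ahead of dramas, which is the kind of pattern a different arrangement of the same table can reveal.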

Understanding Context

The analytical skill that has to do with how you group things into categories

Context is crucial for any kind of meaningful data analysis. By contextualizing data, you start to understand why the data shows what it does. Factors including the time of year a movie is released, holidays, and competing events can all have an effect on revenue, which is the gauge Mega-Pik uses to determine success. Audience demographics such as age, gender, education, and income levels can help you understand who is going to the movies. This context might clarify which genres or storylines are most interesting to movie-goers.

Analysts determine context by looking for patterns or anomalies in a dataset. It also helps to understand the entertainment industry, which provides a whole other set of contextual clues. For example, family films typically generate more revenue when children are on vacation from school. This provides important context about the relationship between genre and revenue over a short timeframe. To understand the relationship between family films and revenue, you might have to search over a time period of more than one year to avoid inaccurate conclusions based on school schedules. Further, the “season” in which children are on vacation from school differs by country, which is another contextual clue you have to take into account. An accurate analysis of this data needs to come from cross-referencing all of the various contexts, including external data or historical trends.

Understanding context helps you solve problems by narrowing down variables that are most likely to influence the outcome, which in turn enables you to come up with more meaningful insights.

Data Strategy

The analytical skill that involves managing the people, processes, and tools used in data analysis

Data strategy is the management of the people, processes, and tools used in data analysis. In this scenario, think of it as the approach you use to analyze your dataset. One element might be the tools you use. If Mega-Pik wants a relatively simple dashboard, you might use Google Sheets or Excel because there are only a few columns of data. On the other hand, if Mega-Pik wants a dashboard where information updates every time new data comes in, you’d need a robust tool like Tableau.

The data strategy you select should be based on the dataset and the deliverables. Think about a data strategy as a kind of resource allocation—the tools, time, and effort that you put into a project will vary based on what you need to accomplish. One strategy you might use for this case study is to prioritize any analyses that would directly affect the next quarter’s revenue. The way you allocate resources can lead you to quicker, more actionable insights.

Analytical thinking

Now that you know the five essential skills of a data analyst, you’re ready to learn more about what it means to think analytically. People don’t often think about thinking.

Thinking is second nature to us. It just happens automatically, but there are actually many different ways to think. Some people think creatively, some think critically, and some people think in abstract ways. Let’s talk about analytical thinking.

Analytical thinking involves identifying and defining a problem and then solving it by using data in an organized, step-by-step manner.

As data analysts, how do we think analytically? The five aspects of analytical thinking are:

Visualization

In data analytics, visualization is the graphical representation of information. Some examples include graphs, maps, or other design elements. Visualization is important because visuals can help data analysts understand and explain information more effectively.

Strategy

Strategizing helps data analysts see what they want to achieve with the data and how they can get there. Strategy also helps improve the quality and usefulness of the data we collect. By strategizing, we know all our data is valuable and can help us accomplish our goals.

Problem-Oriented

Data analysts use a problem-oriented approach in order to identify, describe, and solve problems. It’s all about keeping the problem top of mind throughout the entire project.

For example, say a data analyst is told about the problem of a warehouse constantly running out of supplies. They would move forward with different strategies and processes.

But the number one goal would always be solving the problem of keeping inventory on the shelves.

Data analysts also ask a lot of questions. This helps improve communication and saves time while working on a solution. An example of that would be surveying customers about their experiences using a product and building insights from those questions to improve their product.

This leads us to the fourth quality of analytical thinking:

Correlation

Correlation means being able to identify a relationship between two or more pieces of data. You can find all kinds of correlations in data. Maybe it’s the relationship between the length of your hair and the amount of shampoo you need. Or maybe you notice a correlation between a rainier season and a high number of umbrellas being sold.

But as you start identifying correlations in data, there’s one thing you always want to keep in mind:

Correlation does not equal causation. In other words, just because two pieces of data are both trending in the same direction, that doesn’t necessarily mean one causes the other.
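To make the idea of a correlation concrete, here is a minimal sketch that computes the Pearson correlation coefficient by hand. The rainfall and umbrella figures are invented, and `pearson_correlation` is a helper written for this example rather than a library function. It assumes neither list is constant, since a constant list would cause a division by zero.

```python
from math import sqrt

def pearson_correlation(xs, ys):
    """Return the Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least two values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations (proportional to the covariance).
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)

# Invented monthly figures: rainy days and umbrellas sold.
rainy_days = [2, 5, 8, 12, 15]
umbrellas_sold = [10, 22, 35, 50, 61]

r = pearson_correlation(rainy_days, umbrellas_sold)
```

A value of `r` close to 1 says the two series rise together. It says nothing about whether one causes the other.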

Big Picture Thinking

This means being able to see the big picture as well as the details. A jigsaw puzzle is a great way to think about this. Big-picture thinking is like looking at a complete puzzle.

You can enjoy the whole picture without getting stuck on every tiny piece that went into making it.

If you focus only on individual pieces, you won’t be able to see past them, which is why big-picture thinking is so important. It helps you zoom out and see possibilities and opportunities. This leads to exciting new ideas or innovations.

On the flip side, detail-oriented thinking is all about figuring out all of the aspects that will help you execute a plan. In other words, the pieces that make up your puzzle.

Root cause

Let’s talk about some of the questions data analysts ask when they’re on the hunt for a solution. Here’s one that will come up a lot:

What is the root cause of a problem?

A root cause is the reason why a problem occurs. If we can identify and get rid of a root cause, we can prevent that problem from happening again.

A simple way to wrap your head around root causes is with a process called the Five Whys. The Five Whys is a simple but effective technique for identifying a root cause. It involves asking “Why?” repeatedly until the answer reveals itself. This often happens at the fifth “why,” but sometimes you’ll need more rounds and sometimes fewer.

Five Whys

In the Five Whys you ask “why” five times to reveal the root cause. The fifth and final answer should give you some useful and sometimes surprising insights.

Here’s an example of the Five Whys in action. Let’s say you wanted to make a blueberry pie but couldn’t find any blueberries. You start solving the problem by asking, why can’t I make a blueberry pie? The answer is that there are no blueberries at the store. That’s Why Number 1.

You then ask, why were there no blueberries at the store? Then you discover that the blueberry bushes don’t have enough fruit this season. That’s Why Number 2.

Next, you’d ask, why was there not enough fruit? This would lead to the fact that birds were eating all the berries. Why Number 3, asked and answered.

Now we get to Why Number 4. Ask why a fourth time and the answer would be that, although the birds normally prefer mulberries and don’t eat blueberries, the mulberry bushes didn’t produce fruit this season, so the birds are eating blueberries instead.

Finally, we get to Why Number 5, which should reveal the root cause. A late frost damaged the mulberry bushes, so they didn’t produce any fruit.

You can’t make a blueberry pie because of a late frost months ago. See how the Five Whys can reveal some very surprising root causes? This is a great trick to know, and it can be a very helpful process in data analysis.
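The blueberry pie walkthrough can also be written down as a chain of question-and-answer pairs. The sketch below is one possible way to model it, not a standard tool: `why_answers` and `find_root_cause` are names made up for this example, and the answers paraphrase the steps above.

```python
# Each observed problem maps to the answer uncovered by asking "why?" about it.
# The entries paraphrase the blueberry pie example from the text.
why_answers = {
    "can't make a blueberry pie": "no blueberries at the store",
    "no blueberries at the store": "blueberry bushes had too little fruit",
    "blueberry bushes had too little fruit": "birds ate the berries",
    "birds ate the berries": "mulberry bushes produced no fruit",
    "mulberry bushes produced no fruit": "a late frost damaged the mulberry bushes",
}

def find_root_cause(problem, answers, max_whys=10):
    """Follow the chain of answers until no further "why" has a recorded answer."""
    chain = [problem]
    # max_whys guards against accidental loops in the recorded answers.
    while chain[-1] in answers and len(chain) <= max_whys:
        chain.append(answers[chain[-1]])
    return chain

chain = find_root_cause("can't make a blueberry pie", why_answers)
root_cause = chain[-1]
whys_asked = len(chain) - 1
```

Here the chain ends after five whys at the late frost. In real projects each answer comes from investigation, so the dictionary would be filled in one step at a time.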

Examples

Boost customer service

An online grocery store was receiving numerous customer service complaints about poor deliveries. To address this problem, a data analyst at the company asked their first “why?”

Why #1. “Customers are complaining about poor grocery deliveries. Why?”

The data analyst began by reviewing the customer feedback more closely. They noted the vast majority of complaints dealt with products arriving damaged. So, they asked “why?” again.

Why #2. “Products are arriving damaged. Why?”

To answer this question, the data analyst continued exploring the customer feedback. It turned out that many customers said products were not packaged properly.

Why #3. “Products are not packaged properly. Why?”

After asking their third “why,” the data analyst did some further detective work. They ultimately learned that their company’s grocery packers were not adequately trained on packing procedures.

Why #4. “Grocery packers are not adequately trained. Why?”

This “why” enabled the data analyst to uncover that nearly 35% of all packers were new to the company. They had not yet had the chance to complete all required training, yet they were already being asked to pack groceries for customer orders.

Why #5. “Packers have not completed required training. Why?”

This final “why?” led the data analyst to find out that the human resources department had not provided necessary training to any newly hired packers. This was because HR was in the middle of reworking the training program. Rather than training new hires using the old system, they had provided them with a quick one-page guide, which was insufficient.

So, in this example, the root cause of the problem was that HR had not completed the training program updates and was using a less-thorough guide to train new packers. Fortunately, this was a problem that the grocer could control. And thanks to the data analyst’s work, they provided more support to the HR department to complete the training and retrain all newly hired grocery packers!

Advance quality control

An irrigation company was experiencing an increase in the number of defects in their water pumps. The company’s data team used the five whys to analyze the situation:

Why #1. “There has been an increase in the number of defects in water pumps. Why?”

To answer this question, the data team set up a meeting with shop floor engineers. They asked for some insights into machine performance and manufacturing processes. After some exploration, it was discovered that the machines used to produce the pumps were not properly calibrated.

Why #2. “The machines are not properly calibrated. Why?”

After more brainstorming with the engineering team, it was determined that the machines were miscalibrated during the last maintenance cycle.

Why #3. “The machines were miscalibrated during maintenance. Why?”

Next, the data team investigated the procedures involved with machine calibration. They found out that the current method was inappropriate for the machines.

Why #4. “The calibration method is inappropriate for the machines. Why?”

This “why” led them to discover that the company had recently installed new software in their machines. Because it was a minor software upgrade, the engineers didn’t realize it would affect calibration. They didn’t have the information they needed to properly calibrate the upgraded machines.

Why #5. “The engineers don’t have the information they need to calibrate the upgraded machines. Why?”

The fifth and final “why” turned up even more evidence: The installation team had upgraded machine software, but had failed to share the corresponding calibration procedures with the engineers.

So, in this example, the root cause of the problem was that the engineers lacked important information about how to calibrate the machines using the new software system. The solution was found, and the irrigation company was able to implement it right away. Soon, the engineers had the necessary calibration instructions, and the pump defects were eliminated!

Gap Analysis

Another question commonly asked by data analysts is, where are the gaps in our process? For this, many people will use something called gap analysis.

Gap analysis lets you examine and evaluate how a process works currently in order to get where you want to be in the future.

Businesses conduct gap analysis to do all kinds of things, such as improve a product or become more efficient. The general approach to gap analysis is understanding where you are now compared to where you want to be.

Then you can identify the gaps that exist between the current and future state and determine how to bridge them.
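As a rough sketch of that comparison, the snippet below subtracts a current state from a desired future state and ranks the gaps by relative size. The metric names and numbers are hypothetical, `gap_analysis` is a helper invented for this example, and it assumes every target value is nonzero.

```python
# Hypothetical metrics: names and numbers are invented for illustration.
current_state = {"on_time_delivery_rate": 0.82, "orders_per_day": 400, "customer_rating": 4.1}
future_state = {"on_time_delivery_rate": 0.95, "orders_per_day": 500, "customer_rating": 4.5}

def gap_analysis(current, target):
    """Return metric -> (target - current), ordered with the largest relative gap first."""
    gaps = {name: target[name] - current[name] for name in target}
    return dict(
        sorted(
            gaps.items(),
            key=lambda item: abs(item[1]) / abs(target[item[0]]),
            reverse=True,
        )
    )

gaps = gap_analysis(current_state, future_state)
largest_gap = next(iter(gaps))
```

Ranking by relative gap is just one choice; a team might instead weight gaps by cost or business impact when deciding how to bridge them.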

Not Before

A third question that data analysts ask a lot is, what did we not consider before?

This is a great way to think about what information or procedure might be missing from a process, so you can identify ways to make better decisions and strategies moving forward.

These are just a few examples of the kinds of questions data analysts use at their jobs every day.

Data life cycle

Plan

Decide what kind of data is needed, how it will be managed, and who will be responsible for it.

Capture

Collect or bring in data from a variety of different sources.

Manage

Care for and maintain the data. This includes determining how and where it is stored and the tools used to do so.

Analyze

Use the data to solve problems, make decisions, and support business goals.

Archive

Keep relevant data stored for long-term and future reference.

Destroy

Remove data from storage and delete any shared copies of the data.

Tools

Spreadsheets

Data analysts rely on spreadsheets to collect and organize data. Two popular spreadsheet applications you will probably use a lot in your future role as a data analyst are Microsoft Excel and Google Sheets.

Spreadsheets structure data in a meaningful way by letting you:

- Collect, store, organize, and sort information

- Identify patterns and piece the data together in a way that works for each specific data project

- Create excellent data visualizations, like graphs and charts

Attribute

A characteristic or quality of data used to label a column in a table

Function

A preset command that automatically performs a specified process or task using the data in a spreadsheet

Observation

The attributes that describe a piece of data contained in a row of a table

Oversampling

The process of increasing the sample size of nondominant groups in a population. This can help you better represent them and address imbalanced datasets
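One simple way to picture oversampling is random duplication of rows from the smaller groups. The sketch below does this until every group matches the largest one. It is only one of several approaches, the `oversample` helper and the user records are invented for this example, and full balancing is an assumption rather than a requirement of the definition above.

```python
import random
from collections import Counter

def oversample(records, group_key, seed=0):
    """Randomly duplicate rows of smaller groups until every group matches the largest."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    groups = {}
    for record in records:
        groups.setdefault(record[group_key], []).append(record)
    target_size = max(len(rows) for rows in groups.values())
    balanced = []
    for rows in groups.values():
        balanced.extend(rows)
        # Draw the missing rows with replacement from the same group.
        balanced.extend(rng.choices(rows, k=target_size - len(rows)))
    return balanced

# Invented user records: 8 users under 70 and 2 users aged 70 or older.
users = [{"age_group": "under_70"}] * 8 + [{"age_group": "70_plus"}] * 2
balanced_users = oversample(users, "age_group")
counts = Counter(user["age_group"] for user in balanced_users)
```

Duplicated rows add no new information, so in practice collecting more responses from the underrepresented group is preferable when that is possible.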

Databases

A database is a collection of structured data stored in a computer system. Some popular Structured Query Language (SQL) programs include MySQL, Microsoft SQL Server, and BigQuery.

SQL

Query languages:

- Allow analysts to isolate specific information from one or more databases

- Make it easier for you to learn and understand the requests made to databases

- Allow analysts to select, create, add, or download data from a database for analysis

Structured Query Language (or SQL, often pronounced “sequel”) enables data analysts to talk to their databases. SQL is one of the most useful data analyst tools, especially when working with large datasets in tables. It can help you investigate huge databases, track down text (referred to as strings) and numbers, and filter for the exact kind of data you need—much faster than a spreadsheet can.

If you haven’t used SQL before, this reading will help you learn the basics so you can appreciate how useful SQL is and how useful SQL queries are in particular. You will be writing SQL queries in no time at all.
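For a first taste of what a query looks like, here is a small sketch that runs SQL from Python using the built-in `sqlite3` module. SQLite stands in here for the database programs named earlier, and the `movies` table, its columns, and its rows are all invented for the example.

```python
import sqlite3

# In-memory database with a hypothetical table; names and rows are invented.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE movies (title TEXT, genre TEXT, revenue INTEGER)")
connection.executemany(
    "INSERT INTO movies VALUES (?, ?, ?)",
    [("Film A", "comedy", 120), ("Film B", "drama", 80), ("Film C", "comedy", 100)],
)

# Filter for the exact rows of interest (a string and a number), then sort them.
query = """
    SELECT title, revenue
    FROM movies
    WHERE genre = ? AND revenue >= ?
    ORDER BY revenue DESC
"""
rows = connection.execute(query, ("comedy", 100)).fetchall()
connection.close()
```

The `SELECT ... FROM ... WHERE` pattern is the core of most queries. The `?` placeholders are how `sqlite3` passes values into a query safely; other database tools use their own placeholder syntax.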

Visualization tools

Data analysts use a number of visualization tools, like graphs, maps, tables, charts, and more. Two popular visualization tools are Tableau and Looker.

These tools:

- Turn complex numbers into a story that people can understand

- Help stakeholders come up with conclusions that lead to informed decisions and effective business strategies

- Have multiple features:

  - Tableau’s simple drag-and-drop feature lets users create interactive graphs in dashboards and worksheets

  - Looker communicates directly with a database, allowing you to connect your data right to the visual tool you choose

Steps to plan a viz

One day, you learn that your company is getting ready to make a major update to its website. To guide decisions for the website update, you’re asked to analyze data from the existing website and sales records. Let’s go through the steps you might follow.

Step 1: Explore the data for patterns

First, you ask your manager or the data owner for access to the current sales records and website analytics reports. This includes information about how customers behave on the company’s existing website, basic information about who visited, who bought from the company, and how much they bought.

While reviewing the data you notice a pattern among those who visit the company’s website most frequently: geography and larger amounts spent on purchases. With further analysis, this information might explain why sales are so strong right now in the northeast—and help your company find ways to make them even stronger through the new website.

Step 2: Plan your visuals

Next it is time to refine the data and present the results of your analysis. Right now, you have a lot of data spread across several different tables, which isn’t an ideal way to share your results with management and the marketing team. You will want to create a data visualization that explains your findings quickly and effectively to your target audience. Since you know your audience is sales oriented, you already know that the data visualization you use should:

- Show sales numbers over time

- Connect sales to location

- Show the relationship between sales and website use

- Show which customers fuel growth

Step 3: Create your visuals

Now that you have decided what kind of information and insights you want to display, it is time to start creating the actual visualizations. Keep in mind that creating the right visualization for a presentation or to share with stakeholders is a process. It involves trying different visualization formats and making adjustments until you get what you are looking for. In this case, a mix of different visuals will best communicate your findings and turn your analysis into the most compelling story for stakeholders. So, you can use the built-in chart capabilities in your spreadsheets to organize the data and create your visuals.

Data visualization toolkit

There are many different tools you can use for data visualization.

You can use the visualization tools in your spreadsheet to create simple visualizations such as line and bar charts.

You can use more advanced tools such as Tableau that allow you to integrate data into dashboard-style visualizations.

If you’re working with the programming language R you can use the visualization tools in RStudio.

Your choice of visualization will be driven by a variety of factors, including the size of your data and the process you used for analyzing it (spreadsheets, databases and queries, or programming languages). For now, just consider the basics.

Spreadsheets (Microsoft Excel or Google Sheets)

In our example, the built-in charts and graphs in spreadsheets made the process of creating visuals quick and easy. Spreadsheets are great for creating simple visualizations like bar graphs and pie charts, and even provide some advanced visualizations like maps, and waterfall and funnel diagrams (shown in the following figures).

But sometimes you need a more powerful tool to truly bring your data to life. Tableau and RStudio are two examples of widely used platforms that can help you plan, create, and present effective and compelling data visualizations.

Tableau

Tableau is a popular data visualization tool that lets you pull data from nearly any system and turn it into compelling visuals or actionable insights. The platform offers built-in visual best practices, which makes analyzing and sharing data fast, easy, and (most importantly) useful. Tableau works well with a wide variety of data and includes an interactive dashboard that lets you and your stakeholders click to explore the data interactively.

You can start exploring Tableau from the How-to Video resources. Tableau Public is free, easy to use, and full of helpful information. The Resources page is a one-stop-shop for how-to videos, examples, and datasets for you to practice with. To explore what other data analysts are sharing on Tableau, visit the Viz of the Day page where you will find beautiful visuals ranging from the Hunt for (Habitable) Planets to Who’s Talking in Popular Films.

Programming languages

A lot of data analysts work with a programming language called R. Most people who work with R end up also using RStudio, an integrated development environment (IDE), for their data visualization needs. As with Tableau, you can create dashboard-style data visualizations using RStudio. I’ll be using RStudio for most of this chapter.

Data fairness

Previously, you learned that part of a data professional’s responsibility is to make certain that their analysis is fair. Fairness means ensuring your analysis doesn’t create or reinforce bias. This can be challenging, but if the analysis is not objective, the conclusions can be misleading and even harmful. In this reading, you’re going to explore some best practices you can use to guide your work toward a more fair analysis!

Consider fairness

Following are some strategies that support fair analysis:

| Best practice | Explanation | Example |
|---|---|---|
| Consider all of the available data | Part of your job as a data analyst is to determine what data is going to be useful for your analysis. Often there will be data that isn’t relevant to what you’re focusing on or doesn’t seem to align with your expectations. But you can’t just ignore it; it’s critical to consider all of the available data so that your analysis reflects the truth and not just your own expectations. | A state’s Department of Transportation is interested in measuring traffic patterns on holidays. At first, they only include metrics related to traffic volumes and the fact that the days are holidays. But the data team realizes they failed to consider how weather on these holidays might also affect traffic volumes. Considering this additional data helps them gain more complete insights. |
| Identify surrounding factors | As you’ll learn throughout these courses, context is key for you and your stakeholders to understand the final conclusions of any analysis. Similar to considering all of the data, you also must understand surrounding factors that could influence the insights you’re gaining. | A human resources department wants to better plan for employee vacation time in order to anticipate staffing needs. HR uses a list of national bank holidays as a key part of the data-gathering process. But they fail to consider important holidays that aren’t on the bank calendar, which introduces bias against employees who celebrate them. It also gives HR less useful results because bank holidays may not necessarily apply to their actual employee population. |
| Include self-reported data | Self-reporting is a data collection technique where participants provide information about themselves. Self-reported data can be a great way to introduce fairness in your data collection process. People bring conscious and unconscious bias to their observations about the world, including about other people. Using self-reporting methods to collect data can help avoid these observer biases. Additionally, separating self-reported data from other data you collect provides important context to your conclusions! | A data analyst is working on a project for a brick-and-mortar retailer. Their goal is to learn more about their customer base. This data analyst knows they need to consider fairness when they collect data; they decide to create a survey so that customers can self-report information about themselves. By doing that, they avoid bias that might be introduced with other demographic data collection methods. For example, if they had sales associates report their observations about customers, they might introduce any unconscious bias the employees had to the data. |
| Use oversampling effectively | When collecting data about a population, it’s important to be aware of the actual makeup of that population. Sometimes, oversampling can help you represent groups in that population that otherwise wouldn’t be represented fairly. Oversampling is the process of increasing the sample size of nondominant groups in a population. This can help you better represent them and address imbalanced datasets. | A fitness company is releasing new digital content for users of their equipment. They are interested in designing content that appeals to different users, knowing that different people may interact with their equipment in different ways. For example, part of their user-base is age 70 or older. In order to represent these users, they oversample them in their data. That way, decisions they make about their fitness content will be more inclusive. |
| Think about fairness from beginning to end | To ensure that your analysis and final conclusions are fair, be sure to consider fairness from the earliest stages of a project to when you act on the data insights. This means that data collection, cleaning, processing, and analysis are all performed with fairness in mind. | A data team kicks off a project by including fairness measures in their data-collection process. These measures include oversampling their population and using self-reported data. However, they fail to inform stakeholders about these measures during the presentation. As a result, stakeholders leave with skewed understandings of the data. Learning from this experience, they add key information about fairness considerations to future stakeholder presentations. |

Key takeaways

As a data professional, you will need to ensure you always consider fairness. This will allow you to avoid creating or reinforcing bias or accidentally drawing misleading conclusions. Using these best practices can help guide your analysis and make you a better data professional!

Data

Definition

First up, we’ll look at the Cambridge English Dictionary, which states that data is:

Information, especially facts or numbers, collected to be examined and considered and used to help decision-making.

Second, we’ll look at the definition provided by Wikipedia, which is:

A set of values of qualitative or quantitative variables.

Set of values

The Wikipedia definition focuses on what data entails. The first thing to focus on is “a set of values”: to have data, you need a set of items to measure from. In statistics, this set of items is often called the population. The set as a whole is what you are trying to discover something about. For example, the set of items required to answer your question might be all websites, or it might be the set of all people coming to websites, or it might be the set of all people getting a particular drug. But in general, it’s a set of things that you’re going to make measurements on.

Variables

The next thing to focus on is “variables” - variables are measurements or characteristics of an item. For example, you could be measuring the height of a person, or you are measuring the amount of time a person stays on a website. On the other hand, it might be a more qualitative characteristic you are trying to measure, like what a person clicks on on a website, or whether you think the person visiting is male or female.

Qualitative

Qualitative variables are, unsurprisingly, information about qualities. They are things like country of origin, sex, or treatment group. They’re usually described by words, not numbers, and they are not necessarily ordered.

Quantitative

Quantitative variables on the other hand, are information about quantities. Quantitative measurements are usually described by numbers and are measured on a continuous, ordered scale; they’re things like height, weight and blood pressure.
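The distinction matters in practice because the two kinds of variables are summarized differently. In the sketch below, the people and their values are invented: the qualitative variable is counted by category, while the quantitative variable gets numeric summaries.

```python
from collections import Counter
from statistics import mean, median

# Invented observations: one row per person.
people = [
    {"country": "Canada", "height_cm": 170.0},
    {"country": "Mexico", "height_cm": 165.0},
    {"country": "Canada", "height_cm": 180.0},
    {"country": "Japan", "height_cm": 161.0},
]

# Qualitative variable: count how often each category appears.
country_counts = Counter(person["country"] for person in people)

# Quantitative variable: numeric summaries such as mean and median are meaningful.
heights = [person["height_cm"] for person in people]
average_height = mean(heights)
median_height = median(heights)
```

Taking the mean of `country` would be meaningless, just as counting how many times each exact height occurs would rarely be useful.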

Data types

Data is rarely presented to you in a structured form.

Types of messy data

Here are just some of the data sources you might encounter. We’ll briefly look at what a few of these datasets often look like or how they can be interpreted. One thing they all have in common is the messiness of the data: you have to work to extract the information you need to answer your question.

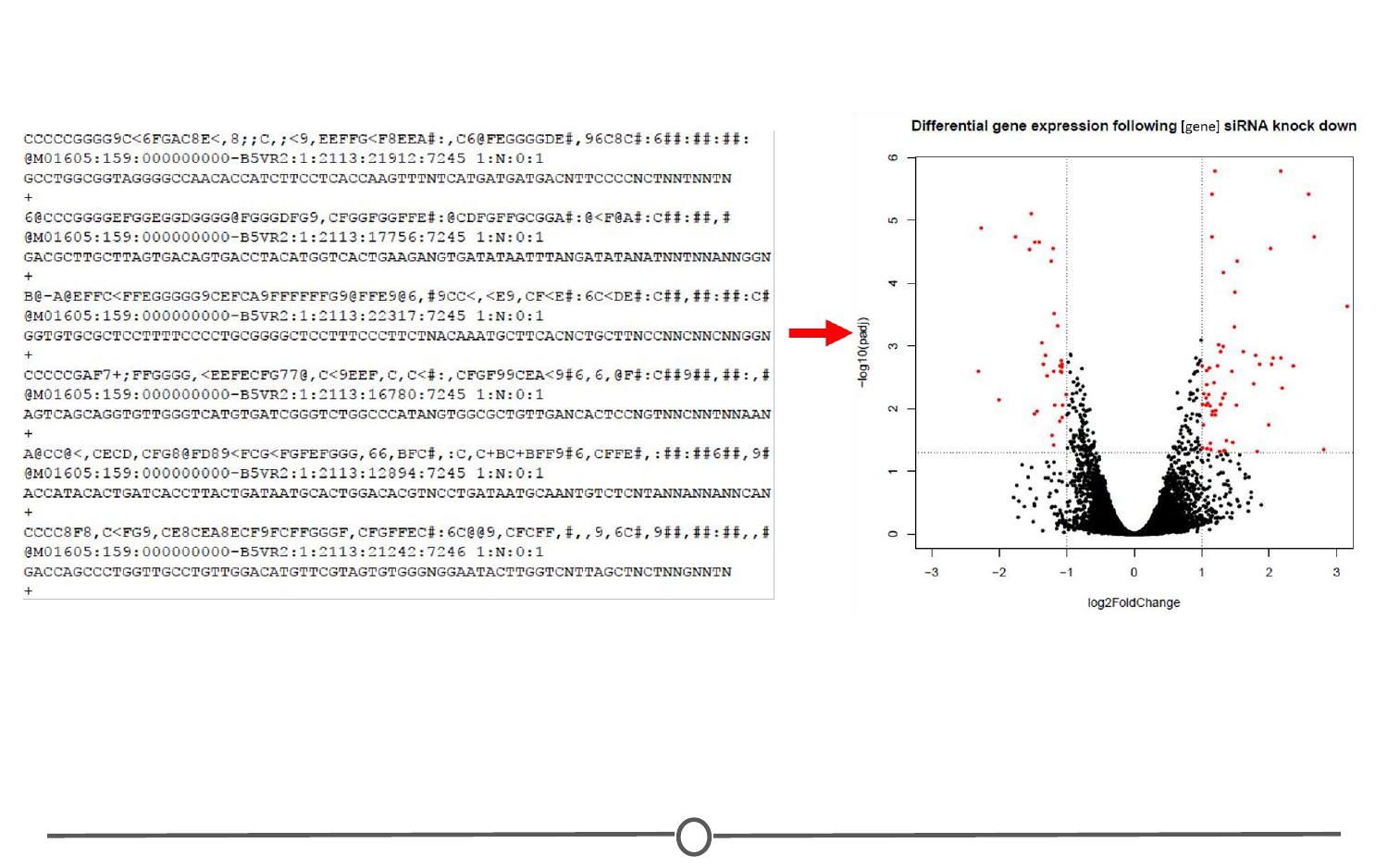

Sequencing data

This data is generally first encountered in the FASTQ format, the raw file format produced by sequencing machines. These files are often hundreds of millions of lines long, and it is our job to parse this into an understandable and interpretable format and infer something about that individual’s genome.
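To give a sense of what parsing FASTQ involves, here is a minimal sketch of a reader. It assumes the common layout of four lines per record (an `@` header, the sequence, a `+` separator, and a quality string), with no line wrapping and no blank lines inside the file. The two reads are made up, and `parse_fastq` is a helper written for this example, so real projects would normally use an established bioinformatics library instead.

```python
from io import StringIO

def parse_fastq(handle):
    """Yield (read_id, sequence, quality) tuples from a four-line-per-record FASTQ stream."""
    while True:
        header = handle.readline().rstrip("\n")
        if not header:
            break  # end of input
        sequence = handle.readline().rstrip("\n")
        separator = handle.readline().rstrip("\n")
        quality = handle.readline().rstrip("\n")
        if not header.startswith("@") or not separator.startswith("+"):
            raise ValueError(f"malformed FASTQ record near {header!r}")
        if len(sequence) != len(quality):
            raise ValueError(f"sequence and quality lengths differ for {header!r}")
        yield header[1:], sequence, quality

# Two made-up reads standing in for a real sequencing file.
example = StringIO("@read1\nGATTACA\n+\nIIIIIII\n@read2\nCCGGAA\n+\nFFFFFF\n")
records = list(parse_fastq(example))

# One simple per-read summary: the fraction of bases that are G or C.
gc_fraction = [(seq.count("G") + seq.count("C")) / len(seq) for _, seq, _ in records]
```

Because the function is a generator, it reads one record at a time, which matters when a file is hundreds of millions of lines long.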

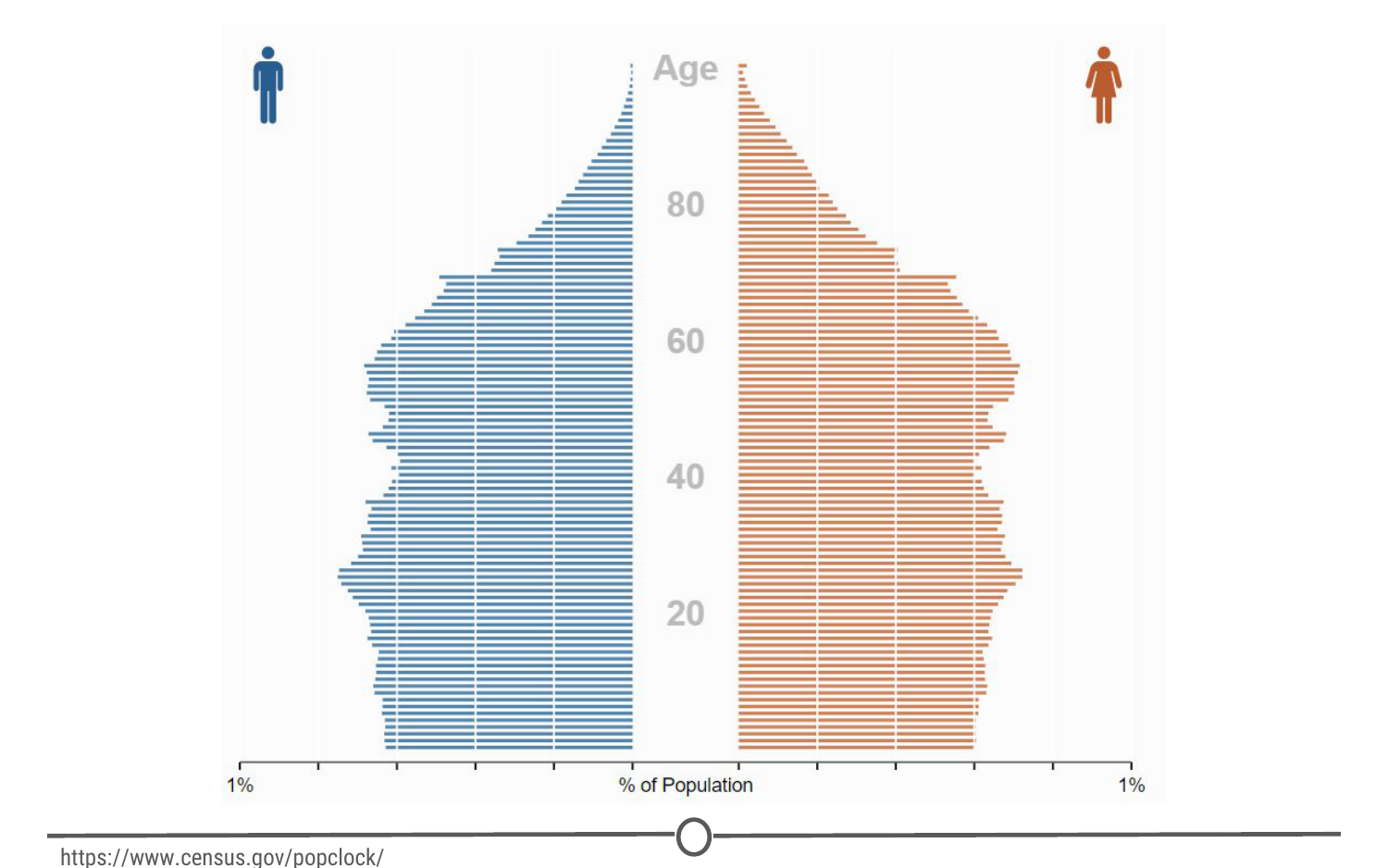

Population census data

One rich source of information is countrywide censuses. In these, almost all members of a country answer a set of standardized questions and submit these answers to the government. When you have that many respondents, the data is large and messy; but once this large database is ready to be queried, the answers embedded in it are important. Here we have a very basic result of the last US census, in which all respondents are divided by sex and age, and this distribution is plotted in a population pyramid plot.

- Electronic medical records (EMR) and other large datasets

- Geographic information system (GIS) data (mapping)

- Image analysis and image extrapolation

- Language and translations

- Website traffic

- Personal/Ad data (e.g., Facebook, Netflix predictions)

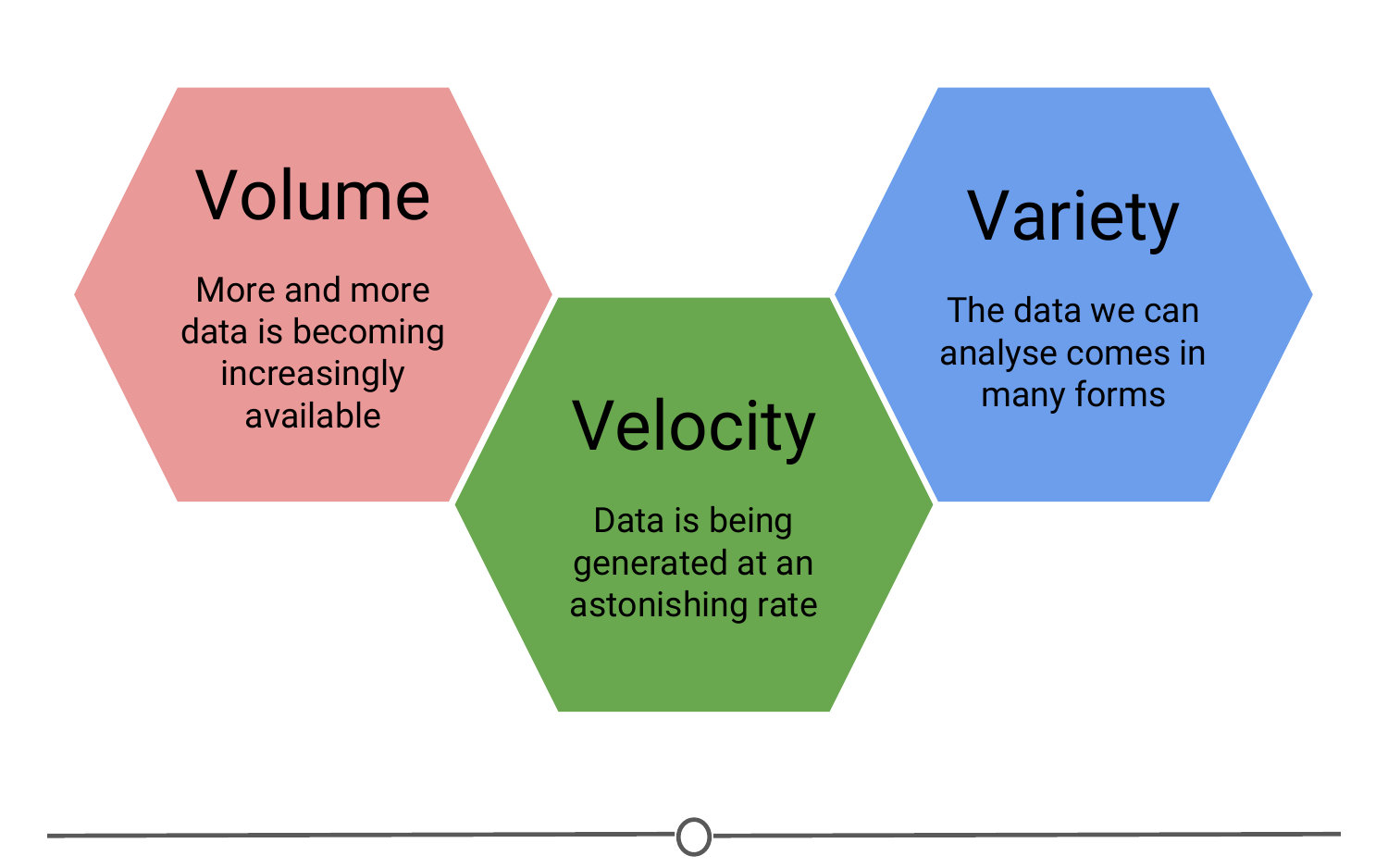

Big data

There are a few qualities that characterize big data. The first is volume.

Volume

As the name implies, big data involves large datasets, and these large datasets are becoming more and more routine. For example, say you had a question about online video. Well, YouTube has approximately 300 hours of video uploaded every minute! You would definitely have a lot of data available to you to analyse, but you can see how wrangling all of that data might be a difficult problem!

And this brings us to the second quality of big data:

Velocity

Data is being generated and collected faster than ever before. In our YouTube example, new data is coming at you every minute! In a completely different example, say you have a question about shipping times or routes. Well, most transport trucks have real-time GPS data available, so you could analyse the trucks’ movements in real time… if you have the tools and skills to do so! The third quality of big data is variety.

Variety

In the examples I’ve mentioned so far, you have different types of data available to you. In the YouTube example, you could be analysing video or audio, which is a very unstructured data set, or you could have a database of video lengths, views or comments, which is a much more structured dataset to analyse.

Types of projects

Now that we have a general idea of what a data scientist is, what types of projects does one take on? (Well, let’s stay within reason here.)

What does an actual data science project look like? Let’s step through an actual data science project.

Question

Every Data Science Project starts with a question that is to be answered with data.

It means that forming the question is an important first step in the process.

Generate data

The second step is finding or generating the data you’re going to use to answer that question.

Analyze data

With the question solidified and data in hand, the data are then analyzed, first by exploring the data and then often by modeling the data, which means using some statistical or machine learning techniques to analyze the data and answer your question.
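The explore-then-model sequence can be sketched on a toy dataset. Everything below is an assumption made for illustration: the numbers are invented, the question ("does time on the site relate to amount spent?") is hypothetical, and ordinary least squares is just one simple modeling choice among many.

```python
from statistics import mean

# Invented data: minutes spent on a website and amount spent per visit.
minutes_on_site = [1.0, 2.0, 3.0, 4.0, 5.0]
amount_spent = [12.0, 14.0, 16.0, 18.0, 20.0]

# Explore: basic summaries before any modeling.
summary = {
    "n": len(minutes_on_site),
    "mean_minutes": mean(minutes_on_site),
    "mean_spent": mean(amount_spent),
    "max_spent": max(amount_spent),
}

# Model: fit amount_spent = intercept + slope * minutes by ordinary least squares.
x_bar, y_bar = summary["mean_minutes"], summary["mean_spent"]
slope = sum(
    (x - x_bar) * (y - y_bar) for x, y in zip(minutes_on_site, amount_spent)
) / sum((x - x_bar) ** 2 for x in minutes_on_site)
intercept = y_bar - slope * x_bar

def predict(minutes):
    """Predicted amount spent for a visit of the given length."""
    return intercept + slope * minutes
```

The exploration step produces the summaries you would sanity-check first; the fitted `slope` and `intercept` are then the model you would interpret and communicate.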

Communicate

After drawing conclusions from this analysis, the project has to be communicated to others. Sometimes this is a report you send to your boss or team at work. Other times it’s a blog post. Often it’s a presentation to a group of colleagues. Regardless, a data science project almost always involves some form of communication of the project’s findings.

R for Data Science

I’ve worked with Python for years, as well as R. I will eventually have more time to create a similar book for Python.