# Once the server is started - Download the Script

wget https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBM-DB0231EN-SkillsNetwork/labs/Final%20Assignment/postgres-setup.shExecute Bash file

User Management - PostgreSQL

Scenario

For this project you will assume the role of database administrator at a data analytics consulting company. You have been assigned to a project where you need to setup, test and optimize the data platform. The platform includes various on-premises database servers like MySQL, PostgreSQL, and an open-source tool such as Datasette, as well as optional cloud-based databases like IBM DB2. Your job is to configure, tune, secure, backup and monitor those databases and keep them running at peak performance.

This is a three-part assignment.

Part 1: You will work with PostgreSQL server and perform the User Management tasks and handle the backup of the databases

Part 2: You will work with MySQL server and perform the tasks like configuration check, recovery of data. You will use indexing to improve the database performance. You will identify which storage engines are supported by the server and which table uses which storage engine. Optionally you will also automate backup tasks.

Part 3: You will be working with an open-source SQLite-based tool, Datasette, or optionally on a cloud instance of an IBM DB2 server, to perform tasks such as data restoration, index creation to enhance query performance, and creating views to simplify query writing.

Grading Criteria

There are a total of 20 points possible for this final project.

Your final assignment will be graded by your peers who are also completing this assignment within the same session. Your grade will be based on the following tasks:

- Task 1.1 - Find the settings in PostgreSQL (1 pts)

- Task 1.2 - Create an User (1 pts)

- Task 1.3 - Create a Role (1 pts)

- Task 1.4 - Grant privileges to the role (2 pts)

- Task 1.5 - Grant role to an user (1 pts)

- Task 1.6 - Backup a database on PostgreSQL server (1 pts)

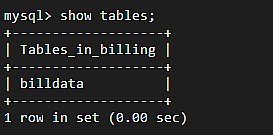

- Task 2.2.1 - Restore MySQL server using a previous backup (1 pts)

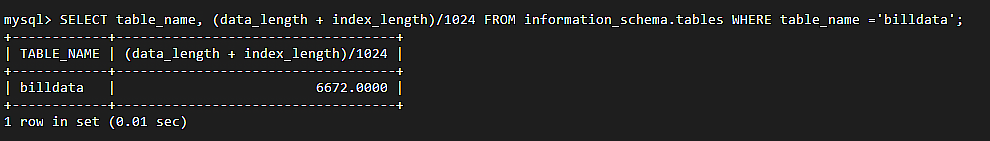

- Task 2.2.2 - Find the table data size (1 pts)

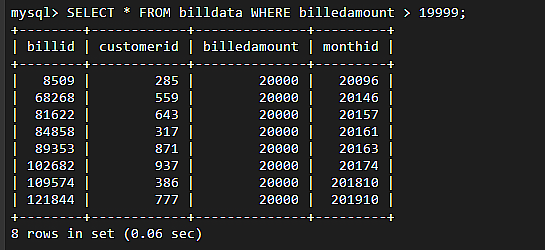

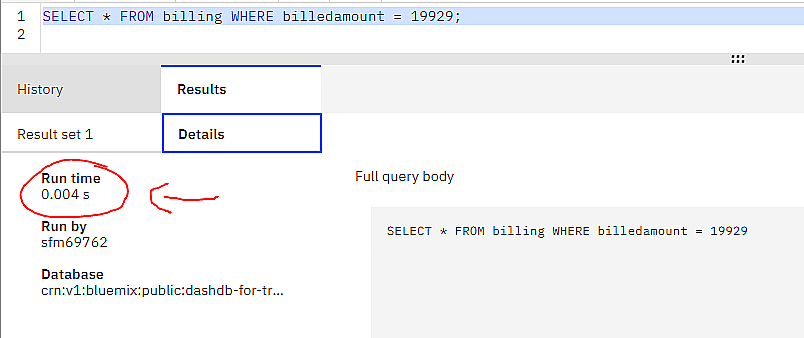

- Task 2.3.1 - Baseline query performance (1 pts)

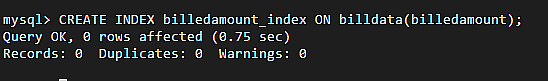

- Task 2.3.2 - Create an index. (1 pts)

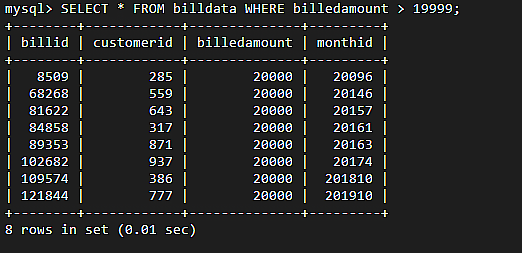

- Task 2.3.3 - Document the improvement in query performance. (1 pts)

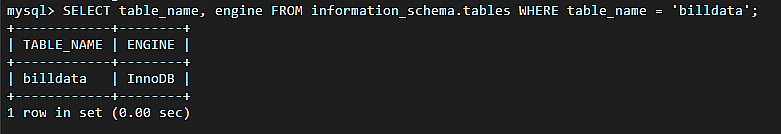

- Task 2.4.1 - Find supported storage engines (1 pts)

- Task 2.4.2 - Find the storage engine of a table (1 pts)

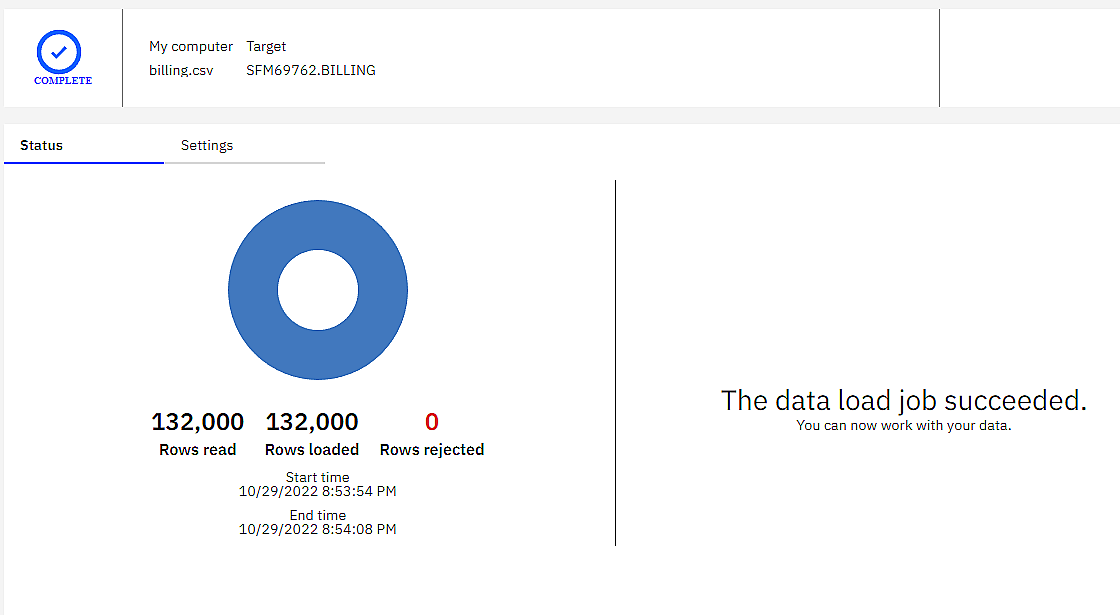

- Task 3.1 - Restore the table billing. (2 pts)

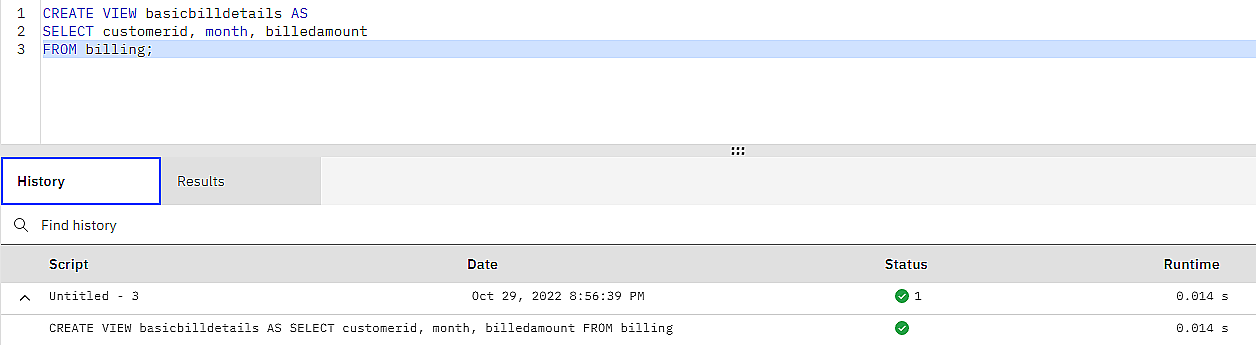

- Task 3.2 - Create a view named basicbilldetails with the columns customerid, month, billedamount. (1 pts)

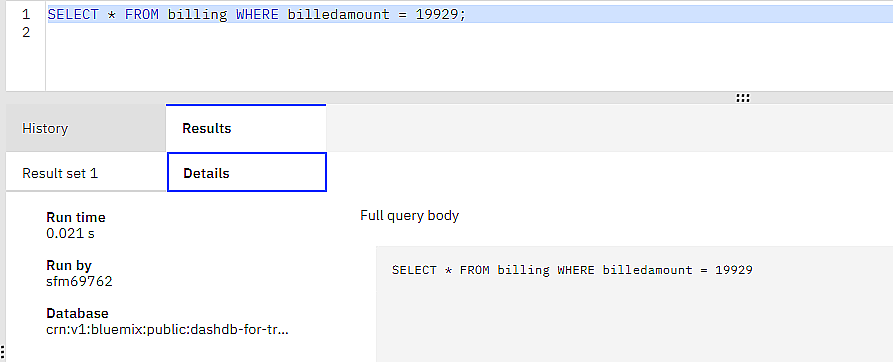

- Task 3.3 - Baseline query performance. (1 pts)

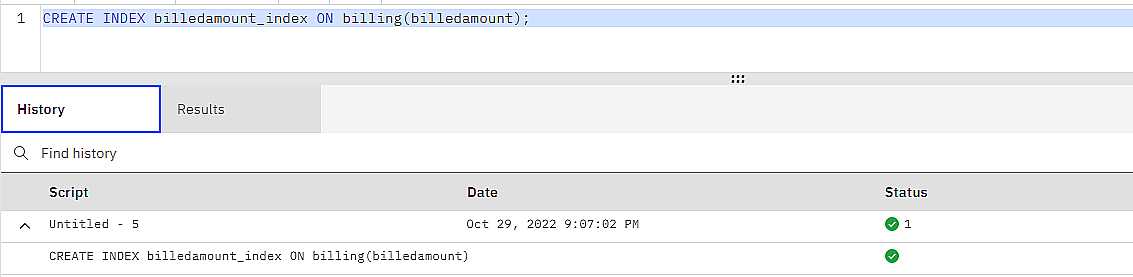

- Task 3.4 - Create an index. (1 pts)

- Task 3.5 - Document the improvement in query performance. (1 pts)

Project

Let’s pretend you’re the DBA so you’ll need to perform user management tasks and handle the backup of the databases on a PostgreSQL server

Tasks

- Installation & Provisioning

- Configuration

- User Mangement

- Backup

Setup

We will be using an open-source cloud IDE platform to access the PostgreSQL database which is running in a Docker container.

Database Script

Download db Script

- Start the PostgreSQL Server - Create an instance on the cloud

- Download the db setup file from: https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBM-DB0231EN-SkillsNetwork/labs/Final%20Assignment/postgres-setup.sh

# Create Database by executing the bash file

bash postgres-setup.shHere is what the bash file contained

# Here is the content of the .sh file:

#download the data file

wget https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBM-DB0231EN-SkillsNetwork/labs/Final%20Assignment/vehicle-data.csv

#download the sql file

wget https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBM-DB0231EN-SkillsNetwork/labs/Final%20Assignment/setup.sql

#run the sql file

psql --username=postgres --host=localhost -f setup.sql

#import the csv file

cat vehicle-data.csv | psql --username=postgres -d tolldata --host=localhost -c "copy toll.tolldata from STDIN WITH (FORMAT csv);"Here is the setup.sql script

CREATE DATABASE tolldata;

\c tolldata;

CREATE SCHEMA toll;

CREATE TABLE toll.tolldata(

row_id integer,

timestamp varchar(25),

vehicle_id integer,

vehicle_type varchar(10),

payment_type integer,

category_id varchar(10),

primary key (row_id)

);Here is part of the data file vehicle-data.csv

1,Thu Aug 19 21:54:38 2021,125094,car,2,VC965

2,Sat Jul 31 04:09:44 2021,174434,car,2,VC965

3,Sat Aug 14 17:19:04 2021,8538286,car,2,VC965

4,Mon Aug 2 18:23:45 2021,5521221,car,2,VC965

5,Thu Jul 29 22:44:20 2021,3267767,car,2,VC965

6,Sat Aug 14 03:57:47 2021,8411850,car,2,VC965

7,Thu Aug 12 03:41:22 2021,6064250,car,2,VC965- Once the bash script is executed all those files are downloaded and some are created

- Open PostgreSQL CLI

Create db from script

- Create db from setup.sql

- Run the setup.sql file

postgres=# postgres=# \i setup.sql

CREATE DATABASE

psql (14.13 (Ubuntu 14.13-0ubuntu0.22.04.1), server 13.2)

You are now connected to database "tolldata" as user "postgres".

CREATE SCHEMA

CREATE TABLE

tolldata-#It still didn’t load the data in the table

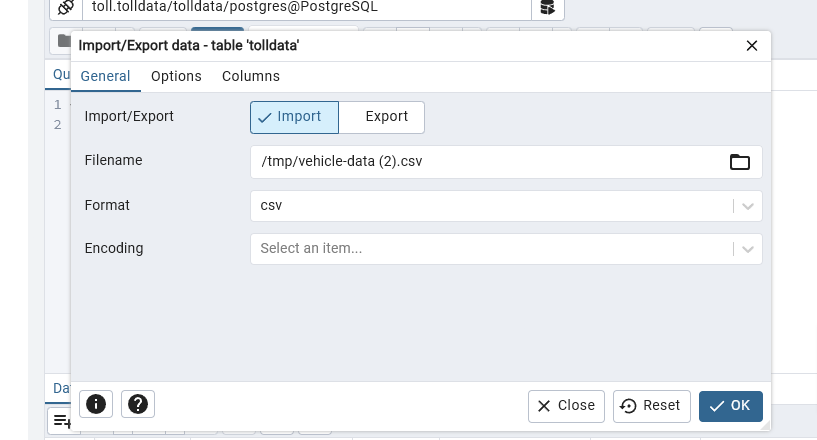

- Opened pgAdmin

- Left file tree > Expand PostgreSQL

- Scroll down to Database: tolldata

- Schemas > Expand > toll (table name)

- Scroll down to Tables > Scroll down to tolldata

- Right click > Import/Export Data

- Import

- Format csv

- Filename: Click on folder > either go to var/lib/pgadmin or choose the unlocked /tmp directory

- Choose the … dots on the right side

- Import > file vehicle-data.csv from local or from server

- Might have to wait till it populates the directory > Select or go back to the Import screen if it takes time, till the Select button is active.

- Select and wait till you receive a confirmation that data is loaded

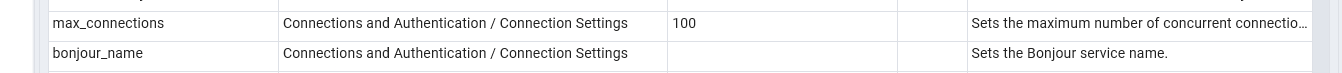

Task 1 - Max Connections

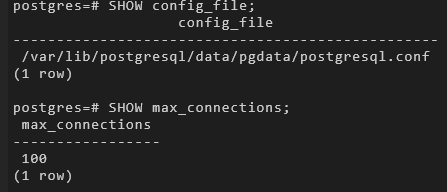

Using CLI

Find the configuration file: postgresql.conf

postgres=# SHOW config_file;

config_file

-------------------------------------------------

/var/lib/postgresql/data/pgdata/postgresql.conf

(1 row)

postgres=# SHOW max_connections;

max_connections

-----------------

100

(1 row)

From pgAdmin

Task 2 - User Management

Create User

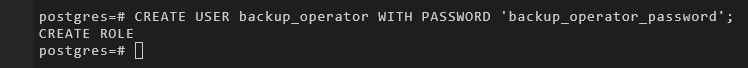

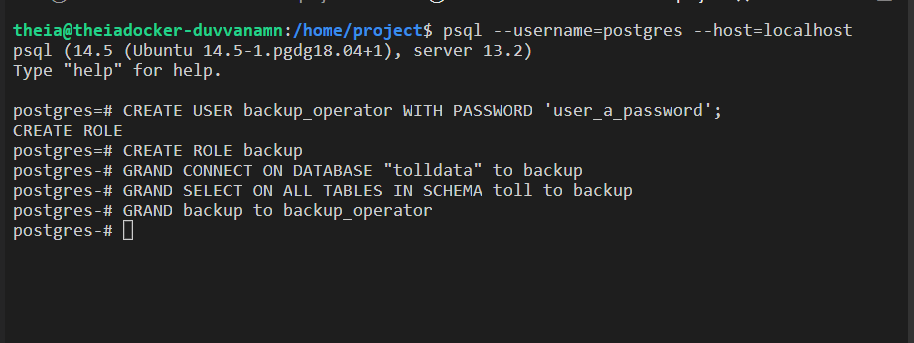

Create a user named backup_operator

postgres=# CREATE USER backup_operator WITH PASSWORD 'backup_operator_password';

CREATE ROLE

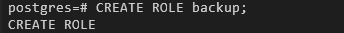

Create Role

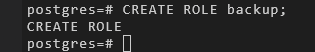

Create a role named backup

postgres=# CREATE ROLE backup;

CREATE ROLE

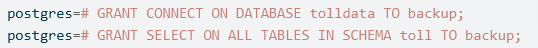

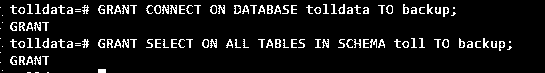

Grant Privileges to Role

Grant the following privileges to the role backup

- CONNECT ON tolldata DATABASE.

- SELECT ON ALL TABLES IN SCHEMA toll.

postgres=# GRANT CONNECT ON DATABASE tolldata TO backup;

postgres=# GRANT SELECT ON ALL TABLES IN SCHEMA toll TO backup;

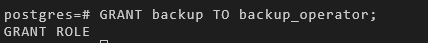

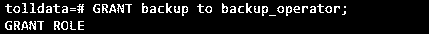

Grant Role to User

Grant the role backup to backup_operator

postgres=# GRANT backup TO backup_operator;

GRANT ROLE

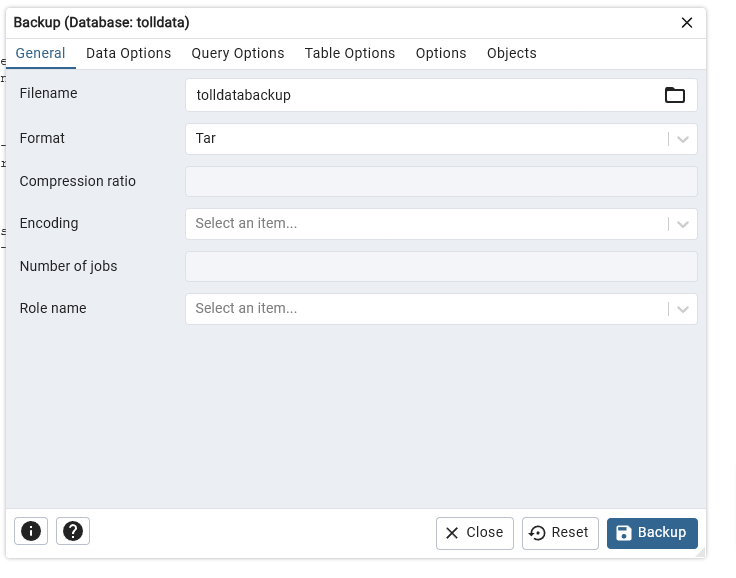

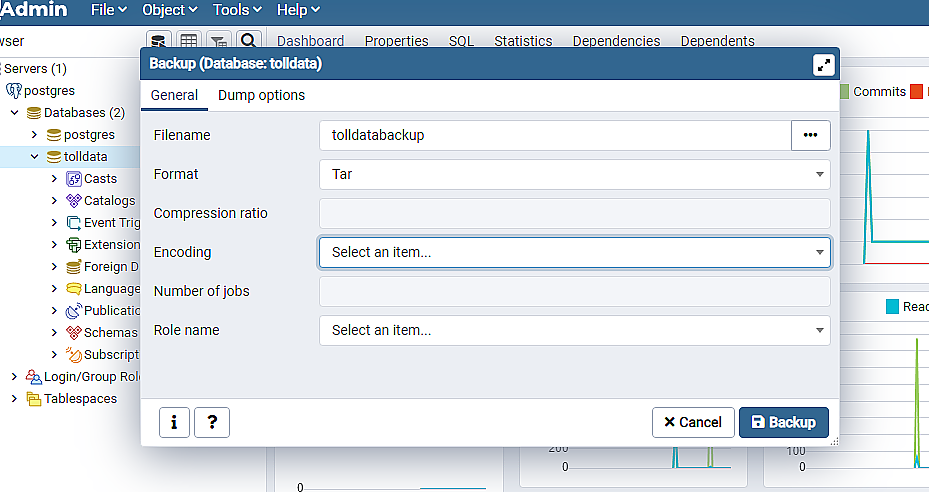

Task 3 - Backup

Backup the database using PGADMIN

Backup the database tolldata into a file named tolldatabackup.tar, select the backup format as Tar

- Open pgAdmin

- Scroll down to tolldata

- Right click on it and backup to a tar tolldatabackup.tar file

dfsdfa

dfds

Instead all of 1

The third from the bottom icon in the sidebar looks like a tree with two circles. Click that. Then click on "PostgreSQL" then click "Start."

Then run the following commands:

wget https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBM-DB0231EN-SkillsNetwork/labs/Final%20Assignment/postgres-setup.sh

chmod +x postgres-setup.sh

You can now proceed with the lab.

Admins, this is what you should be telling people posting here with this error, not "Try a different browser."

Making these labs work is sometimes more of a challenge than actually doing the labs themselves.

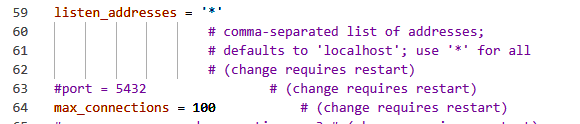

Edit: And I'll spare you the next obstacle; the first hint in 1.2 is incorrect. That file has moved from

/home/project/postgres/data/postgresql.conf

to

/home/project/postgres/data/pgdata/postgresql.conf