-- Drop the tables in case they exist

DROP TABLE IF EXIXTS BookShop;

DROP TABLE IF EXISTS BookShop_AuthorDetails;

-- Create the table

CREATE TABLE BookShop (

BOOK_ID VARCHAR(4) NOT NULL,

TITLE VARCHAR(100) NOT NULL,

AUTHOR_NAME VARCHAR(30) NOT NULL,

AUTHOR_BIO VARCHAR(250),

AUTHOR_ID INTEGER NOT NULL,

PUBLICATION_DATE DATE NOT NULL,

PRICE_USD DECIMAL(6,2) CHECK(Price_USD>0) NOT NULL

);

-- Insert sample data into the table

INSERT INTO BookShop VALUES

('B101', 'Introduction to Algorithms', 'Thomas H. Cormen', 'Thomas H. Cormen is the co-author of Introduction to Algorithms, along with Charles Leiserson, Ron Rivest, and Cliff Stein. He is a Full Professor of computer science at Dartmouth College and currently Chair of the Dartmouth College Writing Program.', 123 , '2001-09-01', 125),

('B201', 'Structure and Interpretation of Computer Programs', 'Harold Abelson', ' Harold Abelson, Ph.D., is Class of 1922 Professor of Computer Science and Engineering in the Department of Electrical Engineering and Computer Science at MIT and a fellow of the IEEE.', 456, '1996-07-25', 65.5),

('B301', 'Deep Learning', 'Ian Goodfellow', 'Ian J. Goodfellow is a researcher working in machine learning, currently employed at Apple Inc. as its director of machine learning in the Special Projects Group. He was previously employed as a research scientist at Google Brain.', 369, '2016-11-01', 82.7),

('B401', 'Algorithms Unlocked', 'Thomas H. Cormen', 'Thomas H. Cormen is the co-author of Introduction to Algorithms, along with Charles Leiserson, Ron Rivest, and Cliff Stein. He is a Full Professor of computer science at Dartmouth College and currently Chair of the Dartmouth College Writing Program.', 123, '2013-05-15', 36.5),

('B501', 'Machine Learning: A Probabilistic Perspective', 'Kevin P. Murphy', '', 157, '2012-08-24', 46);

-- Retrieve all records from the table

SELECT * FROM BookShop;Normalization

In databases you will inevitably have some inconsistencies and duplicate information. Such duplication can cause extra work and inconsistencies if the data is updated, because you must change it in more than one place.

Normalization is the process of organizing your data to reduce redundant data, often by dividing larger tables into multiple relatable tables.

- Normalization helps speed up transactions because you perform updates, additions, and deletes only once on a normalized database.

- It also improves data integrity because it reduces the chance of a change being made in one place but not in another.

As you begin the normalization process, it’s important to recognize that you focus on normalizing each table until you’ve reached the required normal form level.

Normalization usually results in creating more tables, and once all the tables are normalized, you will have a normalized database. There are several forms of normalization, and most data engineers will need to be familiar with the first normal form, second normal form, and third normal form.

1NF

For a table to be in the first normal form, each row must be unique and each cell must contain only a single value. First normal form is also called 1NF.

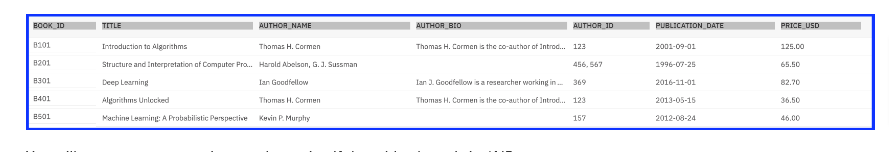

Here you see this table is not 1NF because some columns have multiple values

Normalize

Now you see it does pass the 1NF:

- Each cell in the table now has only one entry, and so

- the table is in the first normal form.

2NF

The database must already be in first normal form, which involves ensuring that every row in the table is unique, and that each cell contains only a single value. Second normal form specifies that you should separate groups of values that apply to multiple rows by creating new tables. Second normal form is also referred to as 2NF.

- If you look at the above table you’ll see that every column in the table is single or atomic valued, but

- it has multiple books by the same author.

- This means that the AUTHOR_ID, AUTHOR_NAME and AUTHOR_BIO details for BOOK_ID B101 and B401 are the same.

- As the number of rows in the table increase, you will be needlessly storing more and more occurrences of these same pieces of information.

- And if an author updates their bio, you must update all of these occurrences.

Normalize

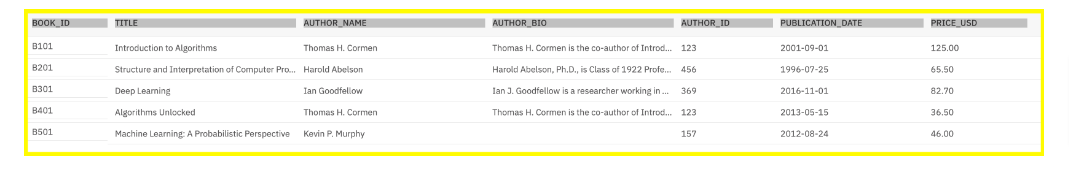

- In this scenario, to enforce 2NF you can take the author information such as AUTHOR_ID, AUTHOR_NAME and AUTHOR_BIO out of the BookShop table into another table

- A table named BookShop_AuthorDetails.

- You then link each book in the BookShop table to the relevant row in the BookShop_AuthorDetails table, using a unique common column such as AUTHOR_ID to link the tables.

- To create the new BookShop_AuthorDetails table:

CREATE TABLE BookShop_AuthorDetails

(AUTHOR_ID INTEGER NOT NULL,AUTHOR_NAME VARCHAR(30) NOT NULL,

AUTHOR_BIO VARCHAR(250),PRIMARY KEY (AUTHOR_ID)) ;

-----Insert the records of Bookshop to this table.

insert into BookShop_AuthorDetails select DISTINCT AUTHOR_ID, AUTHOR_NAME, AUTHOR_BIO FROM BookShop;

select * from BookShop_AuthorDetails;Now you are only storing the author information once per author and only have to update it in one place; reducing redundancy and increasing consistency of data. Thus 2NF is ensured.

3NF

The database must already be in the first and second normal forms to meet the requirements for the third normal form. Next, you must eliminate any columns that do not depend on the key.Third normal form is also referred to as 3NF.

Review the Keys section below and return here to finish.

Let’s consider some additional data about the book example above:

- the books

- the publisher

- and where the book ships from (it is not shown in the images above)

Each publisher ships books from warehouses in their own location.

So where the book ships from depends on the publisher, not the book ID.

Therefore, the book table is not in 3NF because the ships from data does not depend on the primary key.

To fulfill the criteria of the third normal form, 3NF, it is necessary to segregate the publisher and ship’s details into a dedicated publisher’s table.

All tables are now in third normal form, which is as far as I’m going to take it here.

Keys

In this section we will learn how to utilize a primary key to uniquely identify records in a table, use a foreign key to establish relationships between tables, and discern the relations between them.

Primary Key

By definition, a primary key is a column or group of columns that uniquely identify every row in a table. A table cannot have more than one primary key.

The rules for defining a primary key include:

- No two rows can have a duplicate primary key value.

- Every row must have a primary key value.

- No primary key field can be null.

Continue the example above: If you are not using Datasette tool, there are a multitude of ways to set a primary key in a table, and most all of them are easier than the process we’ll go through here.

- To uniquely identify every row in the BookShop and BookShop_AuthorDetails tables, you will create a primary key.

- Set the BOOK_ID column of the BookShop table and the AUTHOR_ID column of the BookShop_AuthorDetails table as primary keys for their respective tables.

- Both columns were declared as NOT NULL when the tables were created (verify this in the SQL script or table definition).

- The BookShop_AuthorDetails table inherits data types and column constraints, including NOT NULL, from the BookShop parent table).

Set Primary Key for BookShop table:

--Drop the table.

DROP TABLE IF EXISTS BookShop;

-----Recreate it with Primary Key -------

CREATE TABLE BookShop (

BOOK_ID VARCHAR(4) NOT NULL,

TITLE VARCHAR(100) NOT NULL,

AUTHOR_NAME VARCHAR(30) NOT NULL,

AUTHOR_BIO VARCHAR(250),

AUTHOR_ID INTEGER NOT NULL,

PUBLICATION_DATE DATE NOT NULL,

PRICE_USD DECIMAL(6,2) CHECK(Price_USD>0) NOT NULL,PRIMARY KEY (BOOK_ID));

INSERT INTO BookShop VALUES

('B101', 'Introduction to Algorithms', 'Thomas H. Cormen', 'Thomas H. Cormen is the co-author of Introduction to Algorithms, along with Charles Leiserson, Ron Rivest, and Cliff Stein. He is a Full Professor of computer science at Dartmouth College and currently Chair of the Dartmouth College Writing Program.', 123 , '2001-09-01', 125),

('B201', 'Structure and Interpretation of Computer Programs', 'Harold Abelson', 'Harold Abelson, Ph.D., is Class of 1922 Professor of Computer Science and Engineering in the Department of Electrical Engineering and Computer Science at MIT and a fellow of the IEEE.', 456, '1996-07-25', 65.5),

('B301', 'Deep Learning', 'Ian Goodfellow', 'Ian J. Goodfellow is a researcher working in machine learning, currently employed at Apple Inc. as its director of machine learning in the Special Projects Group. He was previously employed as a research scientist at Google Brain.', 369, '2016-11-01', 82.7),

('B401', 'Algorithms Unlocked', 'Thomas H. Cormen', 'Thomas H. Cormen is the co-author of Introduction to Algorithms, along with Charles Leiserson, Ron Rivest, and Cliff Stein. He is a Full Professor of computer science at Dartmouth College and currently Chair of the Dartmouth College Writing Program.', 123, '2013-05-15', 36.5),

('B501', 'Machine Learning: A Probabilistic Perspective', 'Kevin P. Murphy', '', 157, '2012-08-24', 46);

-- Retrieve all records from the table

SELECT * FROM BookShop;Set Primary Key AUTHOR_ID of the Bookshop_AuthorDetails table:

--Drop the table.

DROP TABLE IF EXISTS BookShop_AuthorDetails;

-----Rereate another table BookShop_AuthorDetails with author id as the primary key

CREATE TABLE BookShop_AuthorDetails(AUTHOR_ID INTEGER NOT NULL,AUTHOR_NAME VARCHAR(30) NOT NULL,AUTHOR_BIO VARCHAR(250),PRIMARY KEY (AUTHOR_ID)) ;

-----Insert the records of Bookshop to this table.

insert into BookShop_AuthorDetails select DISTINCT AUTHOR_ID, AUTHOR_NAME, AUTHOR_BIO FROM BookShop;

select * from BookShop_AuthorDetails;Foreign Key

A foreign key is a column that establishes a relationship between two tables. It acts as a cross-reference between two tables because it points to the primary key of another table. A table can have multiple foreign keys referencing primary keys of other tables.

Rules for defining a foreign key:

- A foreign key in the referencing table must match the structure and data type of the existing primary key in the referenced table

- A foreign key can only have values present in the referenced primary key

- Foreign keys do not need to be unique. Most often they are not.

- Foreign keys can be null.

To create a foreign key for the BookShop table, set its AUTHOR_ID column as a foreign key to establish a relationship between the BookShop and BookShop_AuthorDetails tables:

-----Foreign key addition in bookshop

DROP TABLE IF EXISTS BookShop;

CREATE TABLE BookShop (

BOOK_ID VARCHAR(4) NOT NULL,

TITLE VARCHAR(100) NOT NULL,

AUTHOR_NAME VARCHAR(30) NOT NULL,

AUTHOR_BIO VARCHAR(250),

AUTHOR_ID INTEGER NOT NULL,

PUBLICATION_DATE DATE NOT NULL,

PRICE_USD DECIMAL(6,2) CHECK(Price_USD>0) NOT NULL,PRIMARY KEY (BOOK_ID),

FOREIGN KEY (AUTHOR_ID)

REFERENCES BookShop_AuthorDetails(AUTHOR_ID)

ON UPDATE NO ACTION

ON DELETE NO ACTION);Constraints

Here we will review different kinds of relational model constraints crucial for maintaining data integrity in a relational data model.

Entity Integrity

Entity integrity ensures that no duplicate records exist within a table and that the column identifying each record within the table is not a duplicate and not null.

The existence of a primary key in both the BookShop and BookShop_AuthorDetails tables satisfies this integrity constraint because a primary key mandates NOT NULL constraint as well as ensuring that every row in the table has a value that uniquely denotes the row.

Referential Integrity

Referential integrity ensures the existence of a referenced value if a value of one column of a table references a value of another column.

The existence of the foreign Key (AUTHOR_ID) in the BookShop table satisfies this integrity constraint because a cross-reference relationship between the BookShop and BookShop_AuthorDetails tables exists. As a result of this relationship, each book in the BookShop table is linked to the relevant row in the BookShop_AuthorDetails table through the AUTHOR_ID columns.

Domain Integrity

Domain integrity ensures clarity of column purpose and consistency of valid values.

The BookShop table adheres to this constraint through the specification of data types, length, date format, check constraints, and null constraints in its CREATE statement. This comprehensive approach guarantees that the values in each column are not only valid but also conform to the specified domain constraints.